Using AI to judge student writing “has the potential to revolutionise assessment and decimate workload”, according to the findings of a trial of the approach.

No More Marking, an organisation that pioneers comparative judgment as an alternative to marking written work, recently ran an AI assessment project called CJ Lightning. Comparative judgment involves deciding which of two pieces of writing is better.

In a blog post, director of education Daisy Christodoulou and founder Chris Wheadon said the results showed AI “is very good at judging student writing and is a viable and time-saving alternative for many forms of school assessment”.

It comes as the government is pushing to use AI and other technology to cut teacher workload.

Another study last year suggested teachers who use ChatGPT alongside a guide on using it effectively can reduce lesson planning time by 31 per cent.

The CJ Lightning project assessed the writing of 5,251 year 7 students from 44 secondary schools.

Pupils wrote a non-fiction response to a short text prompt about improving the environment.

Teachers uploaded the pupils’ writing to the No More Marking website and then used comparative judgment to assess it.

According to NMM, the process “typically delivers very high levels of inter-rater reliability, and is the gold standard of human judgment”.

AI agreed with 81% of human decisions

In this project, No More Marking asked AI to make judgments too. This allowed them to compare judgments made by humans and AI to see if they agreed.

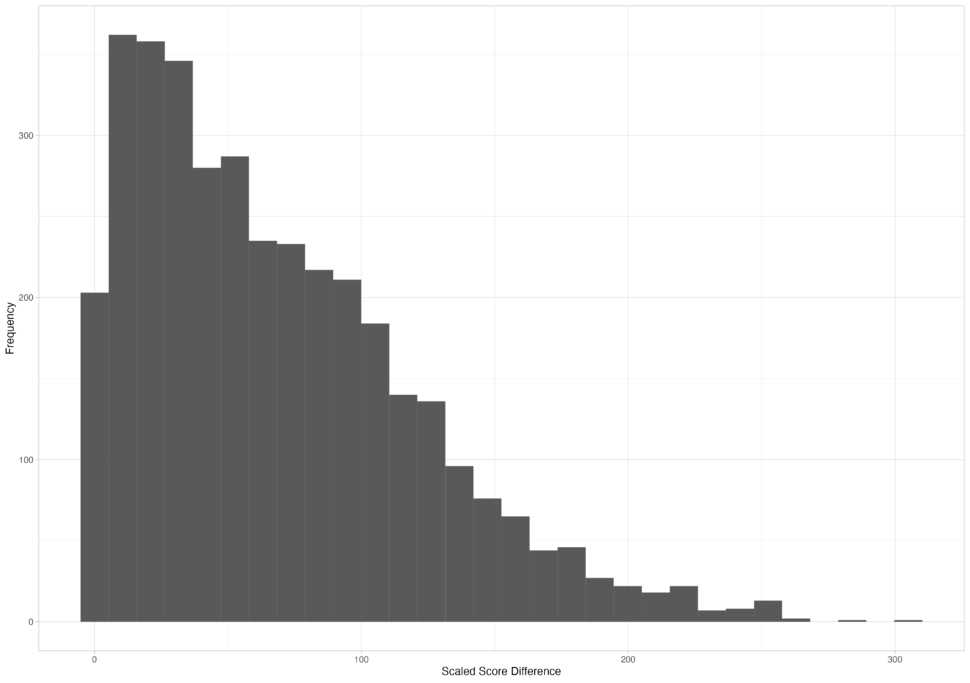

Of the 3,640 decisions made by humans, the AI agreed with 81 per cent of them.

In NMM’s previous human-judged year 7 assessment, the human judges agreed with each other 87 per cent of the time, which the organisation described as “fairly typical”.

But they said total levels of disagreement were “not conclusive” and the “type of error matters.

“The overall agreement can be good, but if the 20 per cent of disagreements are full of absolute howlers, that’s still a huge problem.”

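“Reassuringly”, they said, the disagreements in this trial “peak where the scaled score difference is small”. In other words, the AI and the teachers mostly disagreed when the two pieces of writing were of similar quality.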
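Comparative judgment produces a set of pairwise decisions rather than marks. A statistical model then converts those decisions into scaled scores. The article does not say which model NMM uses. The Python sketch below assumes a Bradley-Terry model, a standard choice for pairwise comparison data, fitted with a simple iterative update. The function names, the virtual-comparison smoothing and the script IDs are illustrative assumptions, not NMM's method. The sketch also shows why disagreements would cluster where score differences are small: when two scripts have similar scores, the model's win probability is close to 50 per cent.

```python
import math
from collections import defaultdict


def fit_bradley_terry(decisions, iterations=500):
    """Convert (winner, loser) pairs into log-scale scores under a Bradley-Terry model.

    Assumption for illustration: every script also gets one virtual win and one
    virtual loss against a fixed reference of strength 1.0, so a script that
    never wins (or never loses) still receives a finite score.
    """
    scripts = sorted({s for pair in decisions for s in pair})
    wins = defaultdict(int)         # real wins per script
    opponents = defaultdict(list)   # one entry per real comparison faced
    for winner, loser in decisions:
        wins[winner] += 1
        opponents[winner].append(loser)
        opponents[loser].append(winner)

    strength = {s: 1.0 for s in scripts}
    for _ in range(iterations):
        updated = {}
        for s in scripts:
            # Minorisation-maximisation update:
            # (real wins + 1 virtual win) / sum over comparisons of 1 / (p_s + p_opponent).
            # The two virtual comparisons against the reference add 2 / (p_s + 1).
            denom = 2.0 / (strength[s] + 1.0)
            denom += sum(1.0 / (strength[s] + strength[o]) for o in opponents[s])
            updated[s] = (wins[s] + 1) / denom
        strength = updated

    # Log-strengths serve as scaled scores; only differences between them matter.
    return {s: math.log(p) for s, p in strength.items()}


def win_probability(score_a, score_b):
    """Model probability that script A is judged better than script B."""
    return 1.0 / (1.0 + math.exp(score_b - score_a))


# Hypothetical example: three scripts, three judgments.
scores = fit_bradley_terry([("A", "B"), ("B", "C"), ("A", "C")])
print({s: round(v, 2) for s, v in scores.items()})
print(win_probability(scores["A"], scores["B"]))  # above 0.5
print(win_probability(0.4, 0.4))                  # 0.5: a coin flip for equal scores
```

Under this model, a pair with a small score gap is close to a coin flip. Two careful judges could reasonably disagree on such a pair, which is why disagreements concentrated there are less worrying than disagreements on pairs that are far apart.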

Disagreements sometimes down to human error

NMM scrutinised a sample of the biggest disagreements in detail, and talked to teachers who made some of the decisions.

“They are not cases where the AI is wrong and the human is right. In fact, some of the biggest disagreements involved teachers being biased by handwriting, and accepting on review that the AI was probably right and they were wrong”.

Other examples “involved teachers making a manual error and clicking the wrong button”.

They also compared the results of 2,297 pupils who took part both in a similar assessment in September last year and in this project.

The correlation between these pupils’ scores in the two sessions was 0.65. NMM said it had seen a correlation of 0.58 between human-judged assessments in May and September last year.

“The high correlation reassures us that the AI is not judging on some strange dimension of writing ability, but is actually providing us with a similar dimension to the one we value,” wrote Christodoulou and Wheadon.

Not just ‘asking AI for a mark’

They added that their approach to AI assessment was “very different to the ‘ask an AI for a mark’ approach, and offers far more assurances that you are getting the right grade”.

They said this is because AI, like humans, is better at comparative judgments than absolute ones. They also had the AI make every decision twice to “eliminate its tendency to position bias”, meaning its tendency to favour a piece of writing because of where it appears in the pair.
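Asking a model to judge each pair twice is one way to control for position bias. The article does not describe how the two answers are combined, so the sketch below makes an assumption: the second run swaps the display order, and the result counts only if both runs prefer the same script. A disagreement is treated as no decision. The `judge` callable stands in for whatever AI model call is actually used. It and the example judges are hypothetical.

```python
from typing import Callable, Optional

# Hypothetical interface: a judge receives two scripts in display order and
# returns 0 if it prefers the first one shown, 1 if it prefers the second.
Judge = Callable[[str, str], int]


def judge_both_orders(judge: Judge, script_a: str, script_b: str) -> Optional[str]:
    """Ask the judge twice, swapping the display order, and combine the answers.

    Returns the preferred script if both runs pick the same one, or None when
    the runs disagree (the choice followed the position, not the script).
    """
    first_run = script_a if judge(script_a, script_b) == 0 else script_b
    second_run = script_b if judge(script_b, script_a) == 0 else script_a
    return first_run if first_run == second_run else None


# Example judges, for illustration only.
def always_first(first: str, second: str) -> int:
    """A maximally position-biased judge: always picks whatever is shown first."""
    return 0


def prefers_longer(first: str, second: str) -> int:
    """A toy judge that prefers the longer text, whatever its position."""
    return 0 if len(first) >= len(second) else 1


print(judge_both_orders(always_first, "short", "much longer"))    # None
print(judge_both_orders(prefers_longer, "short", "much longer"))  # much longer
```

Discarding inconsistent pairs is only one possible choice. An inconsistent pair could instead be passed to the scoring model as a tie, for example as half a win for each script.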

Christodoulou and Wheadon also “think that you could run a 100 per cent AI judged assessment with no human judging.

“However, we would not recommend that you routinely do this. You would always want to run some human-AI hybrids to a) keep validating the AI model and b) make sure that teachers are engaging with student writing.”

For this kind of assessment, they recommend a split of 10 per cent human judgment and 90 per cent AI judgment.

Teachers could save time

In one school with 269 year 7s, a head of department spent an hour and 12 minutes on the assessment.

That was “enough to validate all the other AI decisions and provide robust and meaningful scores for every student”.

“In other schools, they shared the decisions out amongst lots of teachers, resulting in 5-10 minutes of judging per teacher.”

Christodoulou and Wheadon concluded that they “still think [AI technologies] have flaws and are prone to hallucinations”.

“But we think the process we’ve developed here has the potential to revolutionise assessment and decimate workload (quite literally decimate if you follow our recommended 10 per cent human judging approach).”

NMM will run free projects in the summer term for any primary or secondary school wanting to trial the approach.

They will then have a “more comprehensive plan available in academic year 2025-26”.
