The government can’t say whether schools taking part in an £850,000 edtech support scheme improved because of the help they received or because the wider sector became more “confident” with technology.

An evaluation of the second year of the edtech demonstrator programme found “no statistical difference” in results, in four of the five areas, between schools that received support and those that did not.

In fact, in most of the specific areas covered by the £850,000 scheme, schools that received help actually improved less than those that did not receive support in the same area.

The Department for Education has published an evaluation by the Government Social Research department of the second year of the scheme, which was run by the United Church Schools Trust (UCST), the sponsor of United Learning.

The scheme offered support with technology in five areas – education recovery, teacher workload, school improvement, resource management, and curriculum inclusivity and accessibility. It was scrapped after its second year.

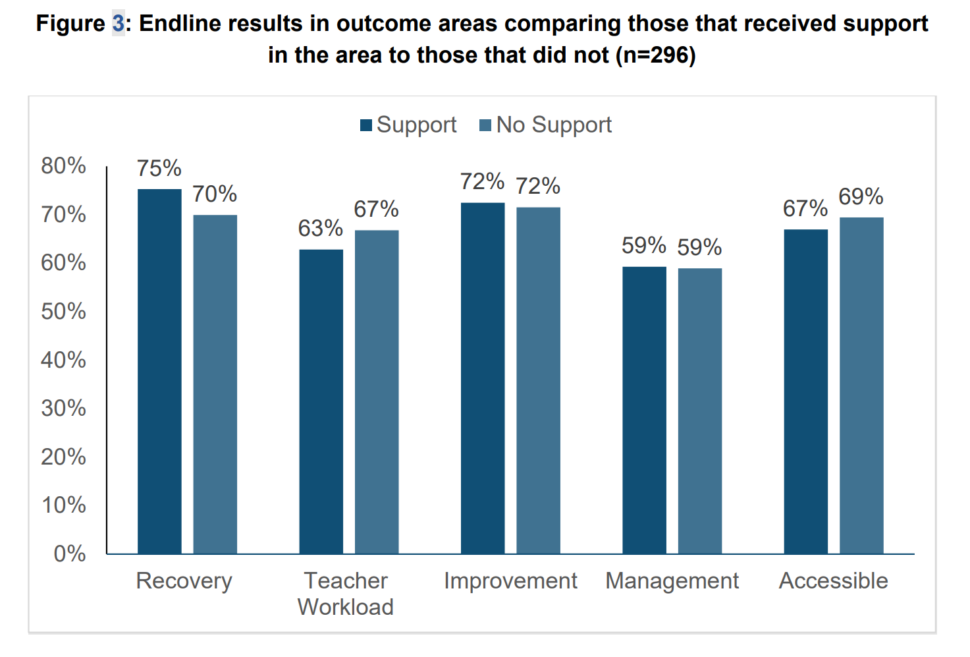

The evaluation, which used 296 survey responses to score participating schools in the five areas by percentage before and after the scheme, showed settings that received support saw their scores increase across the board.

Schools improved in areas where they didn’t get support

But the DfE said it saw “similar improvements” that were “also statistically significant” among schools that did not receive support in the same specific outcome areas.

“This means that schools and colleges have made progress across the board, not just in the areas that they received support in.”

The department said there were “two potential interpretations”.

The first is that schools made progress during the programme even for areas where they did not receive support, for example because demonstrators provided support in more than one area, or because support “spilled over” into other areas.

The second is that schools made progress “regardless of taking part in the programme”, for example as a result of the sector nationally becoming “more confident” in using technology.

“We also looked at the different results for those that were recorded to receive support in a specific area versus those that did not.

“We observed that there was no statistical difference in endline scores between the two groups (except for in one area) which is in line with the above finding that progress was made across outcome areas whether they were recorded to receive support in that area or not.”

The DfE said its analysis showed the “support provided by demonstrators often did not fall neatly into individual outcome areas, with fluidity around the type and amount of support provided”.

A spokesperson for United Learning said it was “encouraging to see such significant changes across hundreds of schools in all the outcome domains from a relatively inexpensive programme”.

Outcomes similar for schools regardless of support

The evaluation shows that schools that did not receive the same support ended up with the same or higher outcomes in all areas except recovery.

Schools that received support with education recovery saw their scores increase by 11.9 per cent. Those that did not receive support saw scores rise by 14.5 per cent.

Support with teacher workload saw schools’ scores rise by 19.2 per cent, but there was an even greater rise of 23.8 per cent among schools that didn’t get support.

Again, on school improvement, institutions receiving support showed a 21.7 per cent increase in scores, compared with 23.3 per cent among those not receiving support.

Support on making the curriculum accessible and inclusive led to scores increasing by 21.6 per cent, but those without support saw an increase of 22.7 per cent.

The only area where schools improved more with support than without it was in resource management, at 22 per cent vs 12.2 per cent.
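The comparison above comes down to simple subtraction, and a minimal sketch makes the gaps easier to see at a glance. It uses only the score increases quoted in this article; the names `increases` and `support_gaps` are illustrative and do not come from the evaluation. These are raw differences, not tests of statistical significance, so they say nothing beyond the DfE's own finding that endline scores did not differ statistically in four of the five areas.

```python
# Score increases (per cent) quoted in the article, as
# (schools with support, schools without support).
increases = {
    "education recovery": (11.9, 14.5),
    "teacher workload": (19.2, 23.8),
    "school improvement": (21.7, 23.3),
    "curriculum accessibility and inclusivity": (21.6, 22.7),
    "resource management": (22.0, 12.2),
}


def support_gaps(data):
    """Return supported minus unsupported increase for each area."""
    return {
        area: round(supported - unsupported, 1)
        for area, (supported, unsupported) in data.items()
    }


gaps = support_gaps(increases)

# Areas where supported schools improved more than unsupported ones.
ahead_with_support = [area for area, gap in gaps.items() if gap > 0]

for area, gap in gaps.items():
    print(f"{area}: {gap:+.1f}")
```

A negative gap means schools without support in that area recorded the larger increase; on the article's figures, only resource management comes out positive.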

Scheme helped schools ‘accelerate’ tech changes

The evaluation did hear positive feedback from participating schools, however. Most of those interviewed said changes made to how they used technology had been “accelerated” through participation in the programme.

Settings also saw the demonstrator school they were paired with as a “critical friend or mentor, who was able to steer and advise them in technology use in an accessible and supportive way”.

However, the “main challenge” was a concern among participants about their “ability to embed or further build on the support they had received through the programme at a school/college level”.

This was due to “difficulties in prioritising involvement in the programme or maintaining momentum, lacking the internal infrastructure or capacity to fully implement or move forward with some of the support provided, and staff willingness to adapt to new practices or use of technology”.
