Education has been sold a hype-filled edtech dream, now with added ‘AI’. It’s a thriving market, but it’s one where schools are essentially unable to make informed and ethical choices – with potentially dire consequences for young people.

Edtech is not regulated in the same way as, say, playground apparatus or food. This is not to say that all edtech is nefarious, but all edtech is designed for profit, and I have yet to see any that is 100 per cent privacy-respecting or secure.

I would love to see some exceptions. I would also love to see the Information Commissioner’s Office (ICO) engage in more enforcement, because it should not be down to schools to get ‘security and privacy by demand’; they should be getting it by design.

In the private sector, compliance teams demand clear responses from vendors on security or privacy issues. In education, procurement is less rigorous.

Vendors find it easier to obfuscate and bamboozle with National Cyber Security Centre or generic AI governance checklists. All it takes is for a school IT team and GDPR consultant to wave things through.

I have seen this as a school data protection officer and as a parent; schools defer to consultants for advice and the advice is often sadly incorrect.

Once schools pay or sign up for the ‘free trial’, they usually discover that the promised innovation is missing. And that’s because the end product of these companies – where their money comes from regardless of their other intentions – is not learning; it is children’s data.

This data can be used to help pupils make academic progress. But it can also be used for more questionable ends. Have we really asked ourselves how these ends reflect our values, and where we draw our boundaries?

The truth is that we haven’t, and those boundaries are being drawn up without broad societal consent. And I’m not just talking about the normalisation in schools of dubious practices such as online proctoring, biometrics in canteens and CCTV in bathrooms.

That free-to-use app is likely using tracking pixels that could well be sharing children’s data with third parties such as advertisers or law enforcement.

Meta, Google and ByteDance (owner of TikTok) are the main users of this technology. It is present on nearly one-third of websites globally, and it should never be embedded into technology for children.

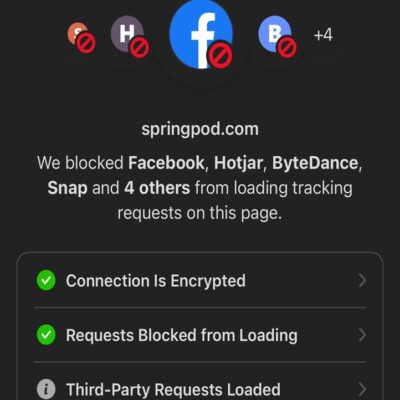

And yet. Free, privacy-respecting tools like Brave, Privacy Badger or DuckDuckGo all provide instant reports on trackers. Here is one such report on the popular work experience website, Springpod.

So while we are nominally teaching children and young people to be careful online, we are in fact – often unwittingly, sometimes because we’ve been deceived – creating huge digital footprints for them.

And all this when we know that education is a high-risk target for cybercriminals. More than half of primaries and over two-thirds of secondaries identified a breach in the past year.

Establishing effective edtech governance is challenging. I wish I could point you to an ICO checklist and tell you to just work through it, but even that would not guarantee safety in this wild west.

Having said that, there are some best bets:

Consent as standard

Whatever you choose, everyone should have the option to opt out at any time.

Bare necessities

Once stolen, biometric data cannot be changed. For any data collection or processing, always ask yourself: Do I really need to do this and should I keep it? The same goes for CCTV in bathrooms.

Safety in numbers

Work with other schools and use your collective power to force better security and privacy from providers.

In-house expertise

Try to recruit privacy and cybersecurity specialists onto your trustee or governance teams, or ask parents with expertise to consult on edtech procurement.

There’s another important dimension to expertise too. If you didn’t grow up poor, harassed by police or discriminated against, it can be hard to imagine how technology might be used against you.

I can think of no stronger argument to listen to your community – nor indeed to get serious about this issue.
