AI is becoming more widely used as the technology grows in sophistication and usability. For schools, AI has potentially significant applications: tailored learning journeys, reduced teacher workload, and the opportunity for greater pupil autonomy, engagement and progress.

As the DfE has acknowledged, AI has the power to transform the sector, helping to reduce unnecessary workload for teachers and allowing them to focus on delivering the best possible education for their pupils.

As we stand at this technological crossroads, the most important question isn’t whether we should use AI, but how we can do so safely, ethically and effectively.

AI promises to remain a prominent topic for the foreseeable future, and I believe the best approach we can take is to embrace this ever-evolving technology.

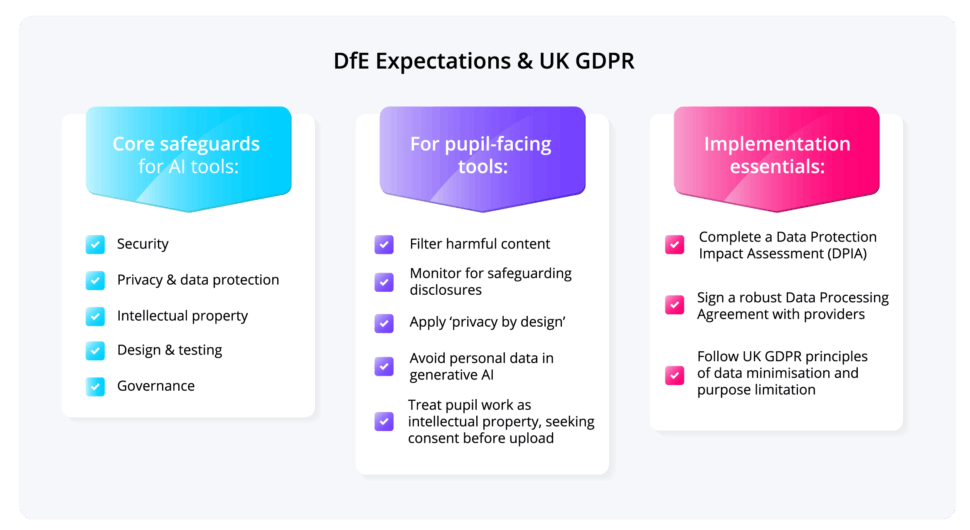

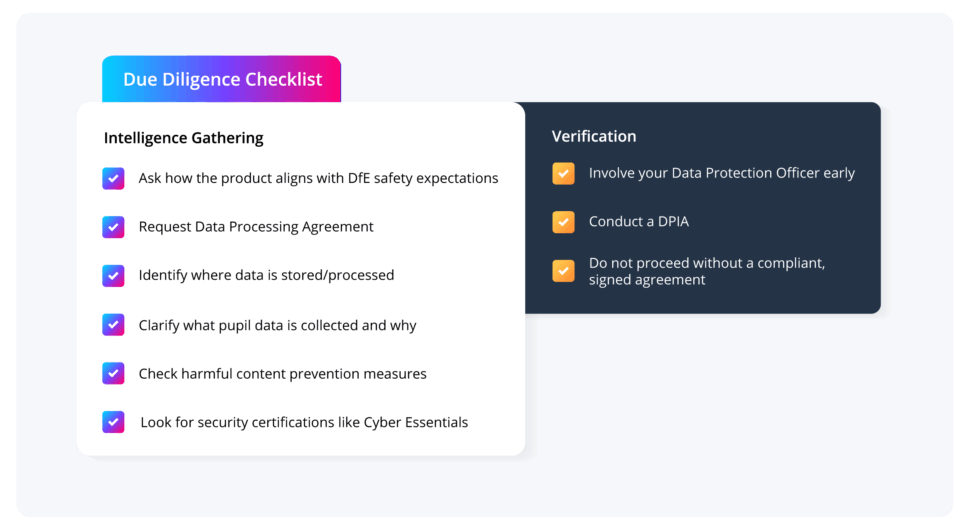

It’s time to go beyond the hype and establish a robust framework for AI adoption in our settings with safety at its heart, directly aligning with both the DfE’s expectations and our goal of providing pupils with a consistent, secure, impactful education.

Safety is the bedrock of AI in education

Safety is paramount in schools, and digital approaches must meet the same safeguarding standards as everything else we do. Pupils, who may not yet have the critical skills to spot bias or protect their personal data, rely on educators for guidance.

Schools handle sensitive information – academic performance, SEND records, behavioural data – where misuse can harm wellbeing and future prospects. Generative AI adds complexity, creating new content that, without guardrails, may be inaccurate, biased or inappropriate.

If an AI tool isn’t safe, its benefits are irrelevant – we must take a safety-first approach.

Balancing innovation with safeguarding

The DfE champions a ‘human-in-the-loop’ philosophy – learning must remain teacher-led. AI can help draft lessons or letters, but teachers must review and own final outputs. Pupils should be taught to safeguard themselves, evaluate answers critically, and cross-reference information.

Prioritise pedagogy over technology. Create a formal AI strategy linked to your School Improvement Plan and invest in CPD – the most important safeguard is an informed human user.

The future of AI regulation

The regulatory landscape is evolving. We may soon see an ‘EdTech Kitemark’ for AI, increased demand for algorithmic explainability, and audits to assess bias. Safe, well-informed adoption will ensure AI benefits everyone in your setting.

The National College’s approach to AI

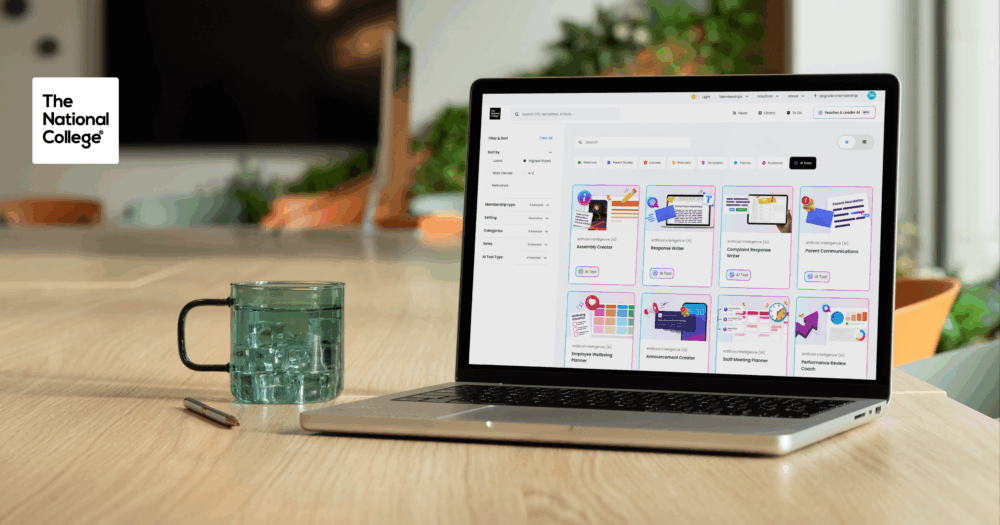

It’s been a pleasure to work with The National College on their leader and teacher AI tools. What has been most encouraging for me is their ‘safety-first’ approach. Their AI tools have been carefully built, using a wealth of data taken from their thousands of hours of webinars and other excellent resources.

The platform is built on Microsoft Azure, which offers a high level of data security as standard. This, coupled with The National College's longstanding commitment to data protection, makes it one of the most robust, secure and reliable toolkits available.

Teacher and Leader AI is included with any membership of The National College, and I highly recommend exploring it.
