Grammar school pupils are not making as much progress as official figures suggest, according to new research into the government’s Progress 8 measure.

Tom Perry, a research fellow at the University of Birmingham and research manager at the Centre for the Use of Evidence in Education, has written a new academic paper on the Progress measures. It finds that the measure favours grammar schools, but it also offers a way to fix the bias.

Perry’s eye-opening findings suggest that the average Progress 8 score for selective schools in 2017 should have been just -0.04, a sharp drop from the figure of 0.45 calculated by the government. Schools Week looked into how the adjustment would work.

How it works

Progress 8 was introduced in 2016 as the headline indicator of school performance, and is used to determine whether a school is above the floor standard or “coasting”.

It looks at how much progress pupils make on the new Attainment 8 measure compared to pupils with the same starting point, recorded at the end of primary school.

For the 2017 exam series, Attainment 8 was calculated from pupils’ best eight exam grades, including English and maths, using a new points system in which the gaps between grades are uneven: pupils moving from a grade B to an A, for example, gain 1.5 extra points, while the difference between a G and an F is just 0.5.

The Progress 8 score is a “reflection of relative differences in Attainment 8 performance, compared with pupils with the same prior attainment”, Perry told Schools Week.
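The comparison Perry describes can be sketched in a few lines of Python. This is a simplified illustration, not the DfE’s published methodology: the pupils and schools are hypothetical, and prior attainment is reduced to two coarse groups rather than the detailed key stage 2 groups used officially. Each pupil’s score is their Attainment 8 minus the average for their prior-attainment group, divided by 10 (the number of weighted slots in Attainment 8), and a school’s score is the mean of its pupils’ scores.

```python
from collections import defaultdict
from statistics import mean


def progress8(pupils):
    """Simplified Progress 8 (illustrative only).

    pupils: list of (school, prior_attainment_group, attainment8) tuples.
    Returns a dict mapping school -> average pupil Progress 8 score.
    """
    # 1. "Estimated" Attainment 8 = mean score of all pupils in the same group
    by_group = defaultdict(list)
    for _, group, a8 in pupils:
        by_group[group].append(a8)
    estimated = {group: mean(scores) for group, scores in by_group.items()}

    # 2. Pupil score = (actual - estimated) / 10, i.e. per Attainment 8 slot
    by_school = defaultdict(list)
    for school, group, a8 in pupils:
        by_school[school].append((a8 - estimated[group]) / 10)

    # 3. School score = mean of its pupils' scores
    return {school: mean(scores) for school, scores in by_school.items()}


# Hypothetical cohort: four pupils in two prior-attainment groups
cohort = [
    ("School A", "high", 60), ("School A", "low", 45),
    ("School B", "high", 50), ("School B", "low", 35),
]
print(progress8(cohort))  # {'School A': 0.5, 'School B': -0.5}
```

Because every pupil is measured against the average of their own group, pupil scores across the whole cohort average to zero by construction. This is the “zero-sum” property Professor Gorard describes later in the article.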

Researchers have found that making like-for-like comparisons between pupils with the same starting point is not enough to level the playing field for schools whose intakes differ in ability.

Perry’s research uses Ofqual’s estimates of measurement reliability, together with National Pupil Database data, to estimate how serious this problem is for the Progress measures.
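A textbook errors-in-variables argument shows why reliability matters. This is a sketch under simplifying assumptions, not a reproduction of Perry’s own model. It assumes a linear link between true prior attainment $T$ and outcome $Y$. It also assumes normally distributed ability with national mean $\mu$, and random test error $e$ that averages out within a school, so that the observed key stage 2 score is $X = T + e$. With reliability $\rho$, predicting outcomes from the national relationship leaves a school whose pupils have average true prior attainment $\mu_s$ with a built-in residual:

$$
\begin{aligned}
Y &= \alpha + \beta T + u, \qquad X = T + e, \qquad
\rho = \frac{\sigma_T^2}{\sigma_T^2 + \sigma_e^2} \\
\mathbb{E}[Y \mid X] &= \alpha + \beta\mu + \rho\beta\,(X - \mu) \\
\bar{Y}_s - \mathbb{E}[Y \mid X = \bar{X}_s] &\approx \beta(\mu_s - \mu) - \rho\beta(\mu_s - \mu) = (1 - \rho)\,\beta\,(\mu_s - \mu)
\end{aligned}
$$

With perfectly reliable tests ($\rho = 1$) the residual vanishes. The less reliable the key stage 2 scores, the more schools with above-average intakes ($\mu_s > \mu$), such as grammar schools, appear to add value they have not actually added.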

He also shows that the problem can be largely fixed by adjusting Progress 8 scores so that each school is compared with other schools with the same average prior attainment, as well as each pupil with other pupils with the same prior attainment.

This adjustment removes the “compositional effect”, where pupils’ attainment is associated with the characteristics of their peers over and above their own. For example, grammar school pupils tend to outperform pupils of similar ability at other types of school, where the ability range is more mixed.

Perry’s research shows that the compositional effects seen in the data are likely to be almost entirely due to measurement error. His adjustments counter the impact of errors in measuring key stage 2 scores (based on reliability estimates from Ofqual). Such errors can make pupils appear to have made more or less progress by key stage 4 than they actually have.

Perry applied his new methodology to this year’s revised GCSE results for 2016-17 and found that Progress 8 scores changed substantially.

The impact on grammar schools

According to the DfE there were 163 selective schools with 22,715 pupils at the end of key stage 4 in the 2017 exam series.

The average Progress 8 score for these schools given by the government was 0.45, but after Perry’s adjustments the average score for selective schools fell to -0.04.

On average, grammar schools’ scores fell by 0.5, with the smallest fall 0.16 and the largest 0.76.

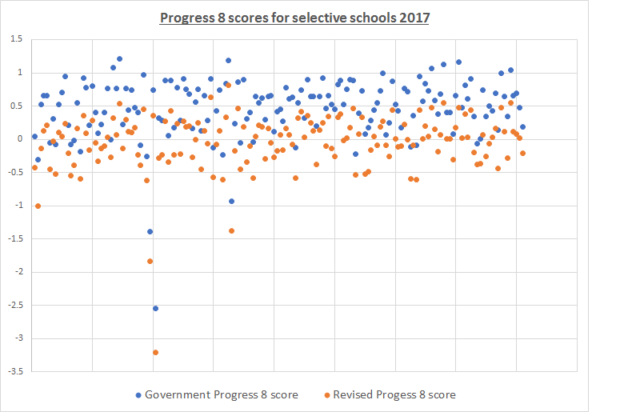

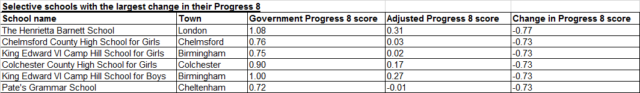

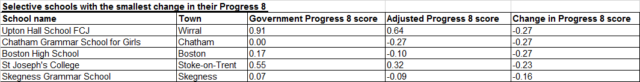

The graph shows how grammar schools’ Progress 8 scores are altered by Perry’s adjustment, and the tables give a sample of individual changes.

Reflecting on the findings

Dave Thomson, chief statistician at FFT, which funds Education Datalab, has also carried out research on Progress 8, including providing advice to the government.

He agreed with Perry that “school intake should be taken into account when producing measures of school performance”.

“The research is really interesting,” he said. “We don’t really know a great deal about the margin of error around key stage two results and how that varies by attainment. It ought to be given more attention.”

Progress 8 is currently “just a descriptive statistic”, he added.

“It’s the Attainment 8 score with the effect of observed key stage 2 prior attainment sucked out of it. You sometimes hear within DfE that they use it as a measure of school effectiveness and that’s where it becomes problematic.

“If you start using it as a measure of school effectiveness then you do need to account for all the additional problems and factors that affect pupil attainment that we know about.”

Professor Stephen Gorard, who has worked extensively on the use of value-added measures such as Progress 8, said the findings “confirm a whole range of work that has been going on”.

“The idea was that raw scores can’t be used to rank schools. Grammar schools are selecting children who they think will do well: the raw score doesn’t mean that the schools have necessarily made those children do better than they would have done if they had gone elsewhere.

“Value-added was meant to get around that, but I don’t think it works, or has ever worked. It is zero-sum, so it doesn’t really measure progress because it is all relative. In order to succeed as a school pupil, in value added terms, others have to be seen to fail.”

Progress 8 is “really, really flaky”, he added. “It’s just another version of value-added. It varies enormously between schools from year to year in a way that raw scores don’t. If it was a genuine characteristic of what is going on in schools, you wouldn’t expect that much change.”

Professor Gorard said that while it is not fair to use raw scores to compare school performance, an inaccurate measure should not be used just because people want a way to gauge performance.

“What needs to be done is more research on how exactly we do it,” he said.

From the abstract: “Pupils at academically selective schools, for example, tend to out-perform similar-ability pupils who are educated with mixed-ability peers”

I’m no statistician, but doesn’t the above statement explain simply why P8 can be larger for selective schools?

Perhaps he meant ‘out-score’ or ‘out-attain’.